To answer these questions, we used the students’ current attainment grades from teaching staff, together with the ‘Approach to Learning’ (essentially effort) grades used to measure engagement with their learning. We compared these with the Standardised Age Scores and indicated grades provided by CAT4, as well as the student self-evaluation data provided by PASS.

Used in isolation, without a deeper understanding of the context, this data could give a misleading picture of the students’ outcomes. We therefore set up review meetings to discuss exactly what was happening with these students, what we were already doing to support them and what else we could do next.


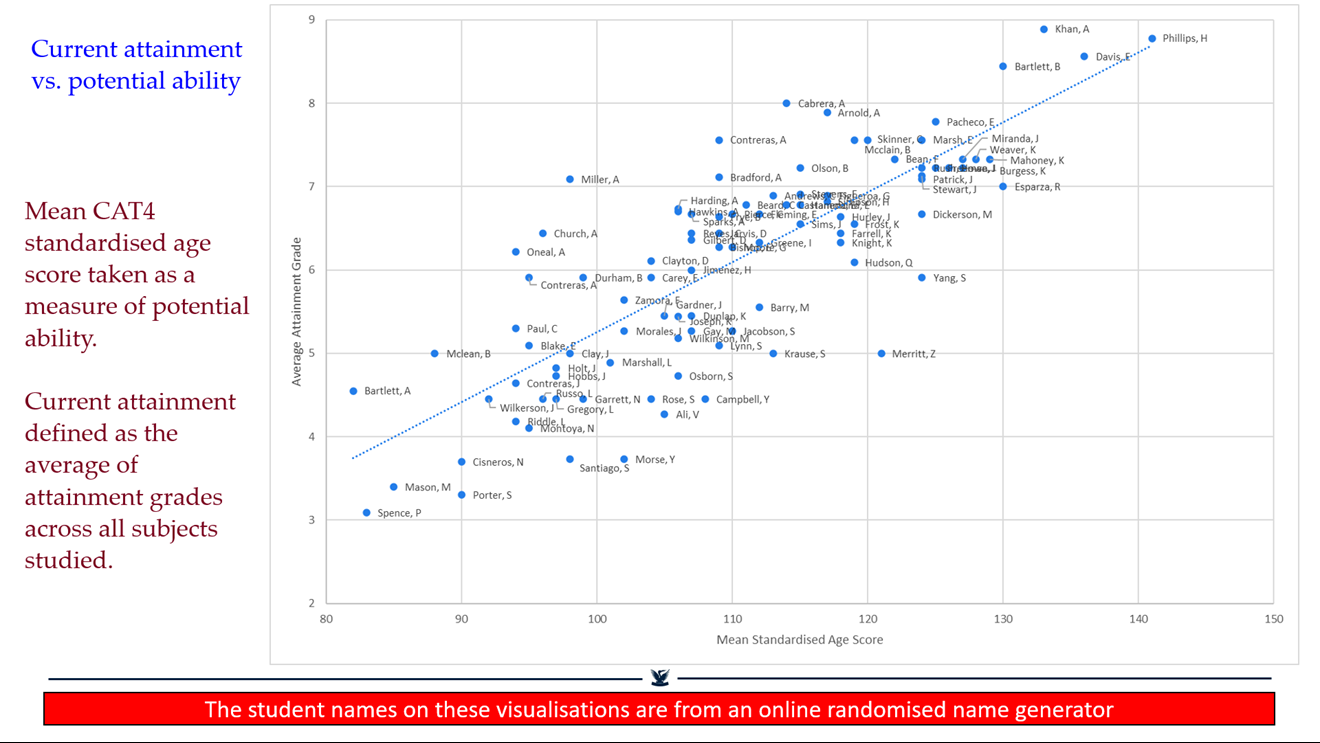

Current attainment vs potential ability

Fig 1 is an example profile of a year group, comparing the Standardised Age Scores from CAT4 with their average attainment grades. At a simplistic level, this told us that the most potentially able students were the ones attaining the higher grades (which illustrated to us that our teaching programme was working to some extent).

We could identify the students (above the green line) who were achieving attainment grades significantly above the average for their ability, and who should be rewarded for this. Perhaps most importantly, we could also identify the students (falling below the red line) whose attainment was significantly below the cohort mean for their ability. These were the students that we needed to have more detailed conversations about.

If we had not been looking at the data in this way, some of the students who were quite able but underperforming might have been missed. There may not have been anything fundamentally wrong with their grades, and they may not have been the lowest in the year group. However, we knew that these students were achieving considerably below where they ought to be.
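As a rough illustration of this kind of check, the sketch below fits a straight trend line of attainment against Standardised Age Score and flags students who sit well above or below it. It is not the method behind Fig 1: the function name `flag_against_trend`, the least-squares trend line, the `band` parameter and the sample figures are all assumptions made for the example.

```python
from statistics import mean, pstdev


def flag_against_trend(students, band=1.0):
    """Flag students whose attainment is well above or below the cohort trend.

    students: list of (name, standardised_age_score, attainment_grade) tuples.
    band: assumed width of the 'expected' zone, in standard deviations of the
          residuals. This stands in for the green and red lines; it is an
          assumption, not the threshold used in the article.
    """
    xs = [sas for _, sas, _ in students]
    ys = [grade for _, _, grade in students]
    x_bar, y_bar = mean(xs), mean(ys)

    # Ordinary least-squares line: attainment = intercept + slope * SAS.
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("All ability scores are identical; no trend to fit.")
    slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sxx
    intercept = y_bar - slope * x_bar

    # Residual = actual attainment minus attainment expected for that ability.
    residuals = [y - (intercept + slope * x) for x, y in zip(xs, ys)]
    threshold = band * pstdev(residuals)

    flags = {}
    for (name, _, _), residual in zip(students, residuals):
        if residual > threshold:
            flags[name] = "above"   # attaining well above the trend: recognise
        elif residual < -threshold:
            flags[name] = "below"   # attaining well below the trend: discuss
        else:
            flags[name] = "within"
    return flags


# Hypothetical cohort: (name, CAT4 Standardised Age Score, average grade).
cohort = [
    ("A", 90, 4),
    ("B", 100, 5),
    ("C", 110, 6),
    ("D", 100, 8),
    ("E", 100, 2),
]
flags = flag_against_trend(cohort)
```

In this made-up cohort, student E has a mid-range ability score and a grade that is not alarming on its own, but is flagged because it sits far below what the trend suggests for that ability.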

Understanding students’ engagement with learning

Administering PASS surveys with students across the school each term allows us to obtain a measure of each student’s self-perceived preparedness for learning and responses to curriculum demands. We also aggregate scores to consider students’ self-perceived academic competence and the contextual factors concerning aspects of a student’s learning.

To visualise the students’ PASS data, we plotted their results on a quadrant chart (see Fig 2) against the difference between their current attainment grades and their indicated grades provided by CAT4. If students were at zero on the attainment axis they were meeting expectations, and if they were above zero they were exceeding expectations. The PASS data then let us compare this with their self-perception, i.e. their engagement with their learning.

Ideally, we wanted our students to be occupying the top-right corner of the quadrant, where they rated themselves as engaged with their learning and were exceeding expectations. In the top-left, we had the students who were exceeding expectations but didn’t rate themselves as engaged with their learning. We therefore wanted to have conversations with those students and their teachers to find out why that might be the case (e.g. academic insecurity or pressure to be high-achieving).

In the bottom-right we had the students who were underperforming but rated themselves as engaged with their learning. These students would need a conversation to discuss what changes could be made to get where they needed to be. Then in the bottom-left we had the students that we really needed to be concerned about – they were struggling and knew that they were. We could therefore start putting interventions in place for these students such as academic mentoring.
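The quadrant logic described above can be sketched in a few lines. This is a minimal illustration, not the tool used to produce Fig 2: the function name `quadrant`, the `engagement_midpoint` dividing line for the PASS score, and the choice to place students exactly at zero in the top half are all assumptions made for the example.

```python
# Follow-up for each quadrant, paraphrased from the description above.
FOLLOW_UP = {
    "top-right": "exceeding expectations and engaged: where we want students to be",
    "top-left": "exceeding expectations but not engaged: talk to student and teachers",
    "bottom-right": "underperforming but engaged: discuss what changes could be made",
    "bottom-left": "underperforming and not engaged: consider interventions",
}


def quadrant(attainment_gap, engagement, engagement_midpoint=50.0):
    """Place one student in a quadrant.

    attainment_gap: current attainment grade minus the CAT4 indicated grade
                    (zero means meeting expectations).
    engagement: the student's PASS-based engagement score.
    engagement_midpoint: hypothetical dividing line between the left and right
                         halves; the real scale and cut-off are not given in
                         the article.
    """
    # Assumption: a student exactly on zero is counted in the top half.
    vertical = "top" if attainment_gap >= 0 else "bottom"
    horizontal = "right" if engagement >= engagement_midpoint else "left"
    return f"{vertical}-{horizontal}"


# Hypothetical students: (name, attainment gap in grades, engagement score).
students = [("F", 1.0, 80), ("G", 0.5, 20), ("H", -1.0, 80), ("J", -1.0, 20)]
placements = {name: quadrant(gap, score) for name, gap, score in students}
```

Looking up `FOLLOW_UP[placements["J"]]` for a student in the bottom-left then returns the prompt to consider interventions such as academic mentoring.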

Measuring the impact

Last year, we had conversations about 240 students in our review meetings. For around 30% of these students we were able to identify strategies that hadn’t already been put in place so that we could try something new for them. By the end of the year, 42% of those 240 students were no longer a cause for concern. This provided us with evidence of the impact of this data visualisation, which wouldn’t have been achievable without the additional insights provided by CAT4 and PASS.
